Introduction

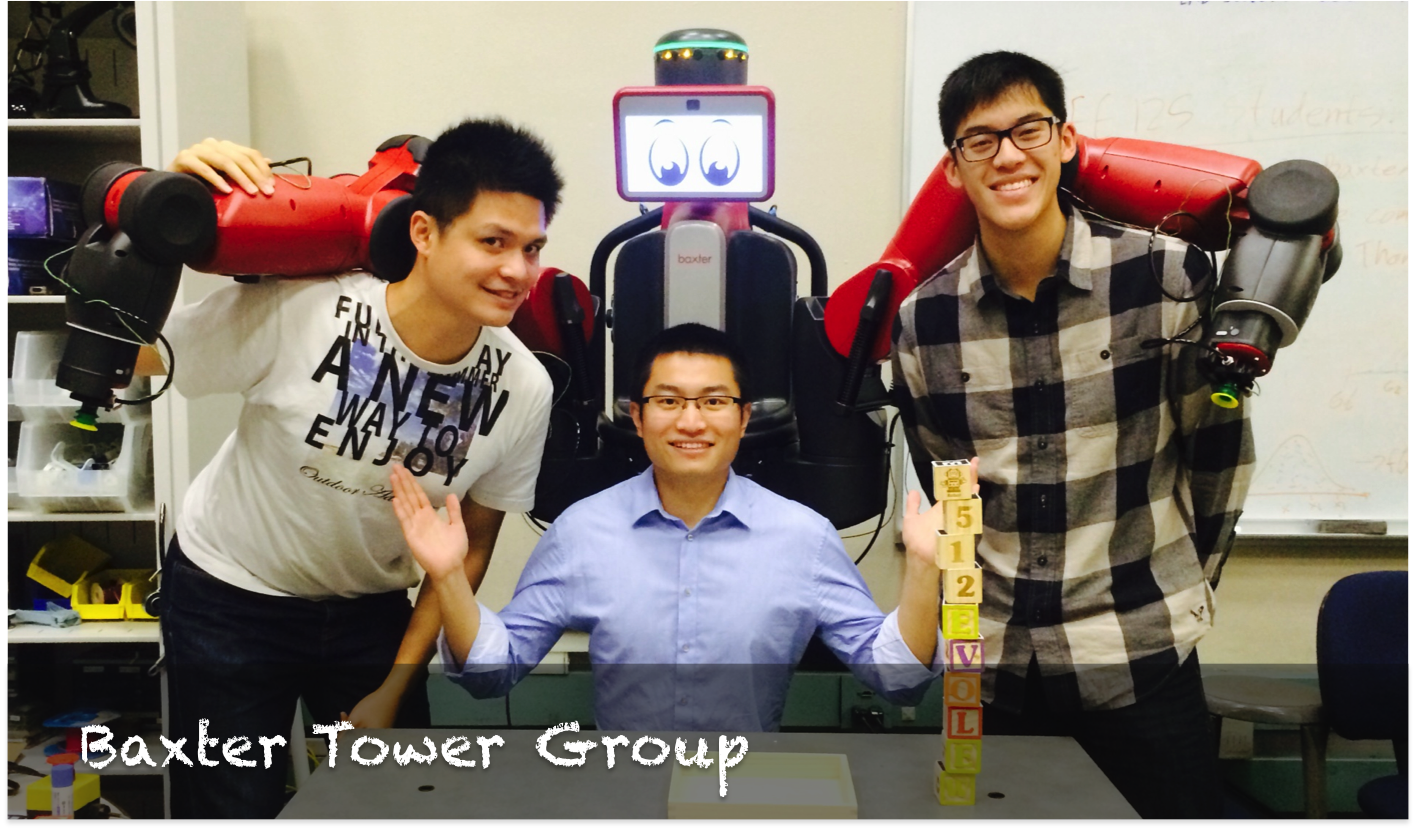

In Baxter Builds a Tower, the end goal is to allow a Baxter robot to autonomously pick up toy blocks in any desired order and then stack them vertically to create a tower.

Baxter Builds a Tower is an interesting project because its success depends on both robust computer vision and good kinematics and path planning. In our traditional mechanical engineering training, we usually deal with the latter rather than the former, so this project is especially attractive to us: it gives us the rare chance to work in both realms of robotics at once. Over the course of the project, we had to overcome the limitations of off-the-shelf computer vision packages as well as the limited accuracy of Baxter's end effector. We discuss these issues in detail in later sections.

The work developed in this project could be useful in car junkyards, where arriving cars need to be stacked and stored. With further extension, it could also aid assembly-line quality assurance tasks, such as removing defective products from the line and storing them in a separate location for further processing. More importantly, Baxter playing with toy blocks is a great way to promote STEM to younger audiences.

Design

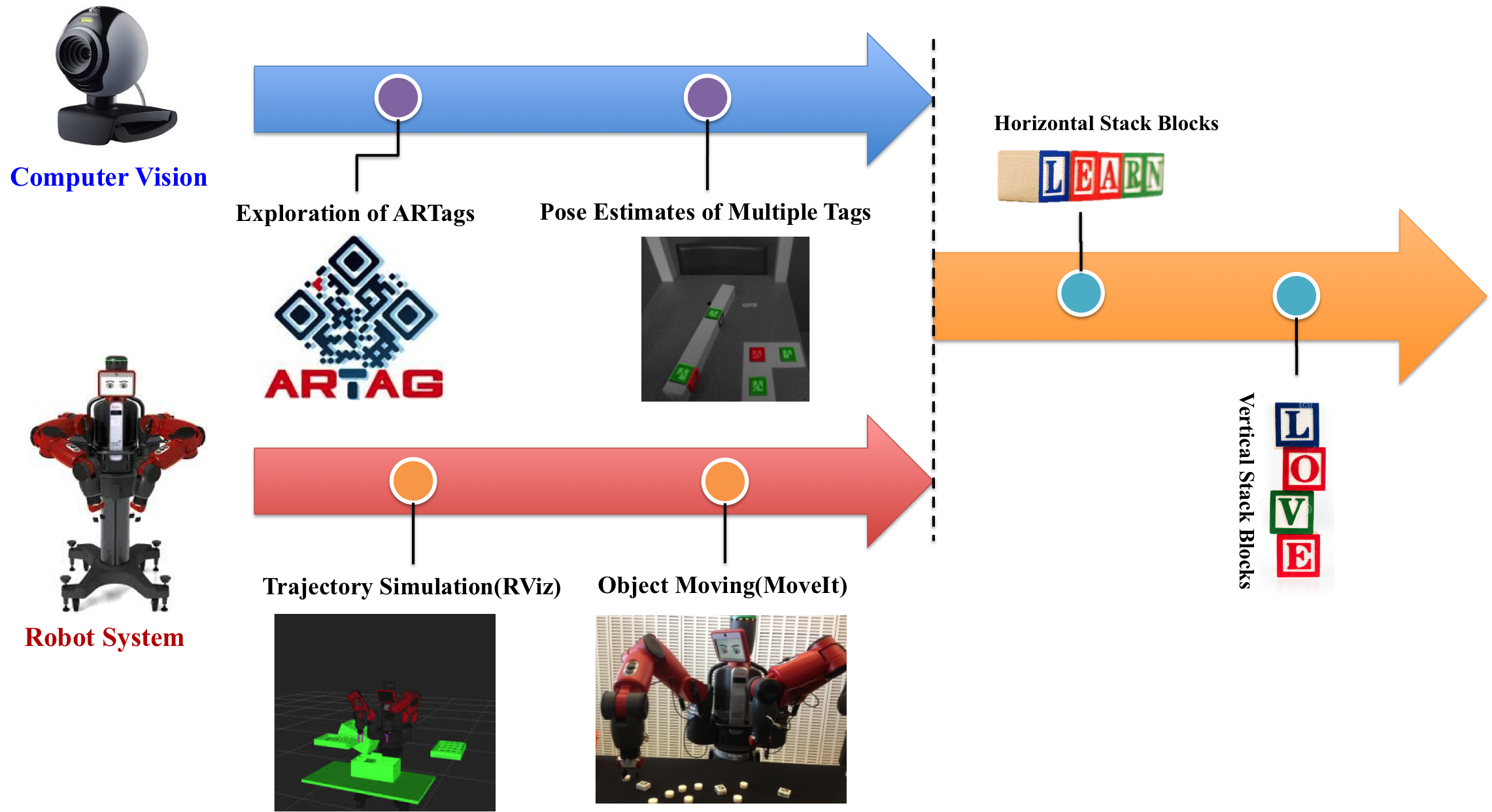

We broke the project down into three parts: computer vision, the robot system, and project integration. We first investigated Baxter's capabilities and AR Tags, and applied techniques we had learned in the EE 215A lab sections. We then integrated these components into a single system and tuned parameters to improve Baxter's performance.

Criteria

- Identify toy blocks through computer vision

- Retrieve toy blocks with robotic arm

- Place toy blocks down and successively stack blocks vertically into a tower

- Perform consistently and repeatably

- Baxter will autonomously pick up many toy blocks and successively stack them to make a tower

Challenges

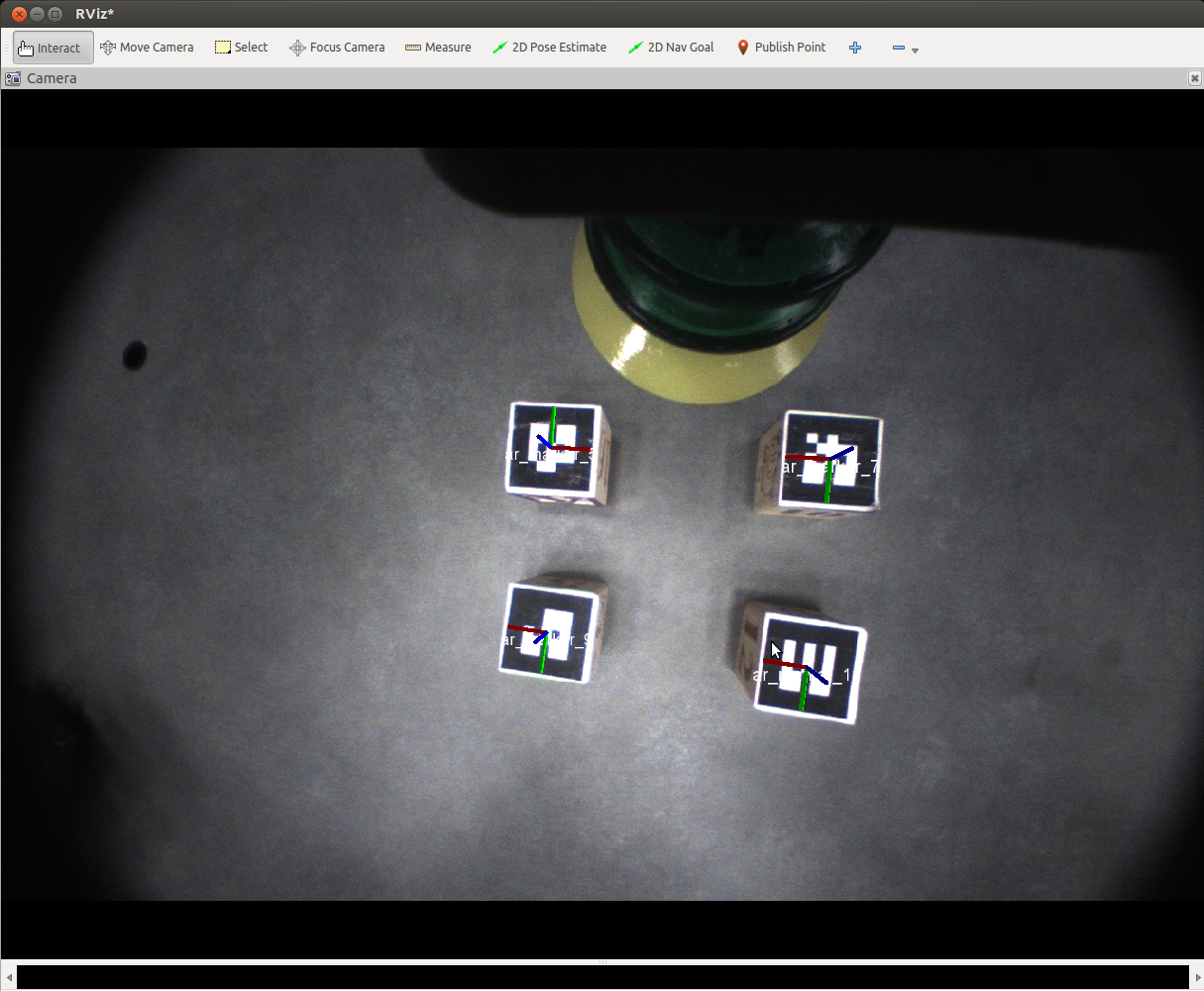

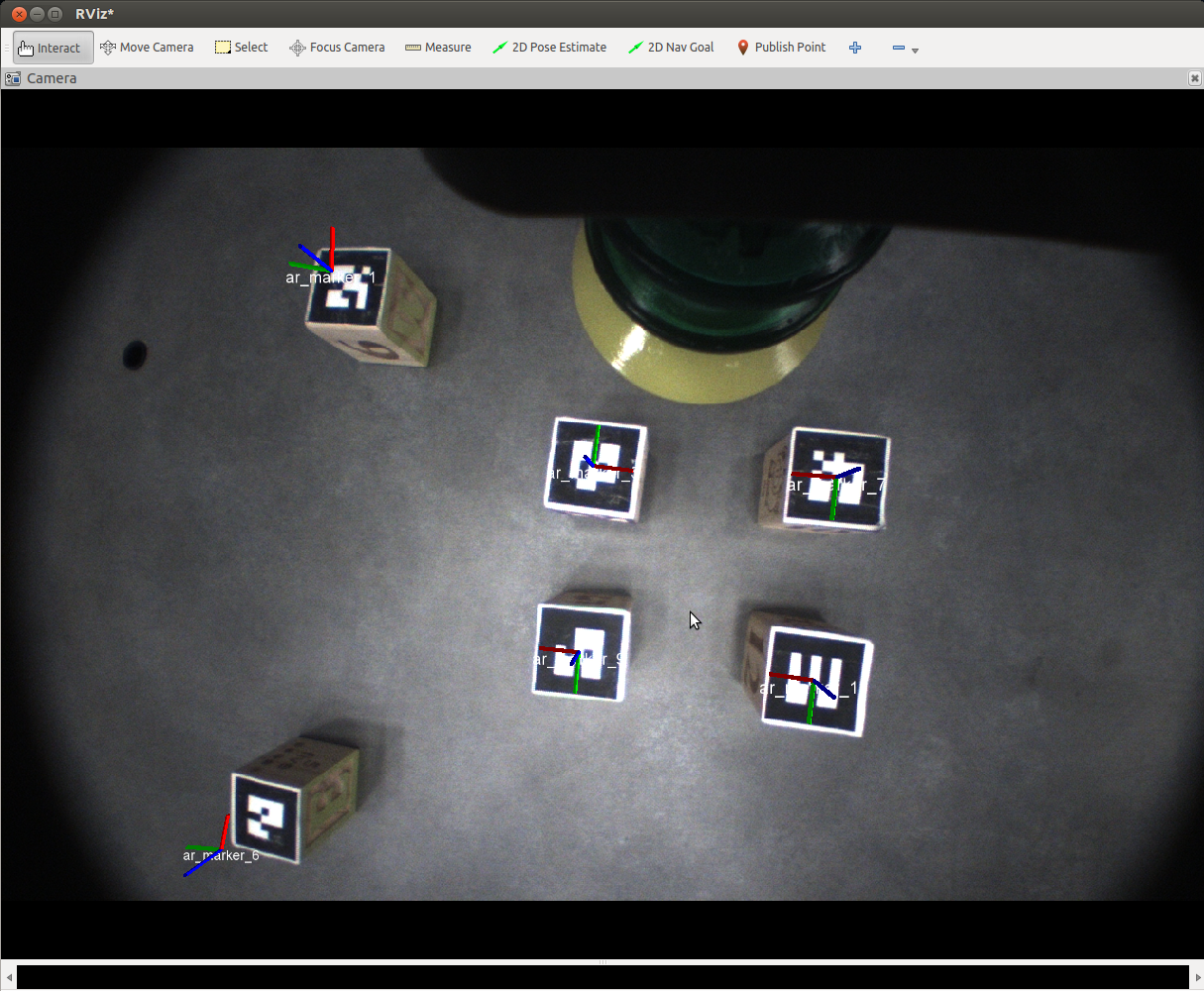

To achieve the above, we decided to use AR Tags and the ROS MoveIt path planner to manipulate toy blocks of the kind commonly sold in toy stores. AR Tags simplify the computer vision task, but at the expense of only being able to manipulate objects with large planar faces that can hold a tag. Moreover, we had to use the cameras on Baxter's hands to perceive the toy blocks, because the head-mounted camera is too far away to detect AR Tags reliably. As a result, the robot workspace shrank to match the hand camera's smaller field of view. Finally, we carefully chose the locations of the toy blocks and the arm trajectories to minimize the chance of collisions between the blocks and the robot. This let us avoid importing CAD models of the environment into RViz and saved computation time in path planning.

The simplifying design choices made here probably would not suffice in the real world. The decision to use AR Tags constrains the realistic sizes of objects we can manipulate. Using hand-mounted cameras, instead of head-mounted or externally mounted cameras, limits the workspace and ties us to platforms, like Baxter, that have hand-mounted cameras. Finally, because Baxter has no model of its environment, the environment must be highly controlled and largely static, which is far more idealized than the real world.

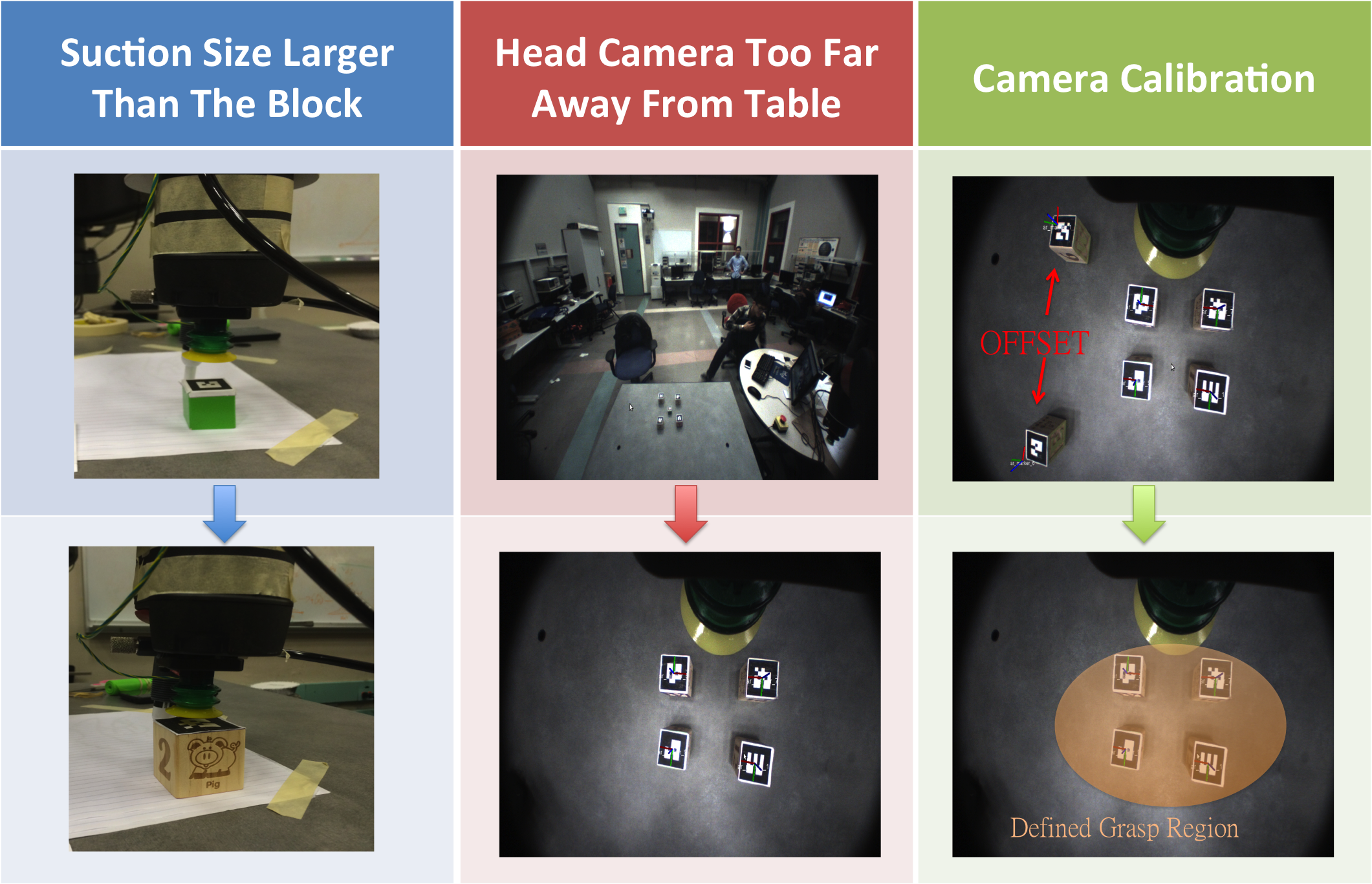

In this project, Baxter's hardware limitations led to three challenges.

- Suction cup larger than the block: When the object is smaller than the suction cup, air leaks past the vacuum gripper and it fails to hold the block. Since we could not change the size of the suction cup, the easiest solution was to change the size of the blocks.

- Head camera too far from the table: The original plan was to use the head camera to search for blocks on the table and the two arms to stack them, similar to how a human would. However, the head camera is so far from the table that it could not detect any AR Tags. We therefore switched to the right hand camera to search for blocks on the table. Because it moves with the arm, we can easily reposition it to get a good AR Tag measurement.

- Camera calibration: When an AR Tag lies too far from the center of the camera image, its measured position has an offset, which sends Baxter to the wrong location when grasping the target. Because we could not calibrate the camera built into Baxter, we defined a grasping region within which the offset is small enough for Baxter to grasp the blocks successfully.
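A grasping region like the one described above can be expressed as a simple bounds check on each tag's position in the base frame before Baxter commits to a grasp. The sketch below assumes that the region is an axis-aligned rectangle on the table. The class and function names (`GraspRegion`, `graspable_tags`) and the numeric bounds are hypothetical placeholders, not the values or code we actually used.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspRegion:
    """Axis-aligned rectangle on the table, in Baxter's base frame (meters).

    Hypothetical: the real region's shape and bounds were tuned experimentally.
    """
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        # Inside the region, the camera offset is assumed small enough to grasp.
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def graspable_tags(tag_positions, region):
    """Return the IDs of tags whose (x, y) position lies inside the region.

    tag_positions maps a tag ID to an (x, y, z) position in the base frame.
    """
    return [tag_id for tag_id, (x, y, _z) in tag_positions.items()
            if region.contains(x, y)]


if __name__ == "__main__":
    region = GraspRegion(x_min=0.55, x_max=0.75, y_min=-0.45, y_max=-0.25)  # hypothetical
    tags = {0: (0.60, -0.30, -0.20), 1: (0.90, -0.30, -0.20), 2: (0.70, -0.40, -0.20)}
    print(graspable_tags(tags, region))  # [0, 2]
```

Tags that fall outside the region are simply skipped until the arm is moved so they appear closer to the image center.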

Implementation

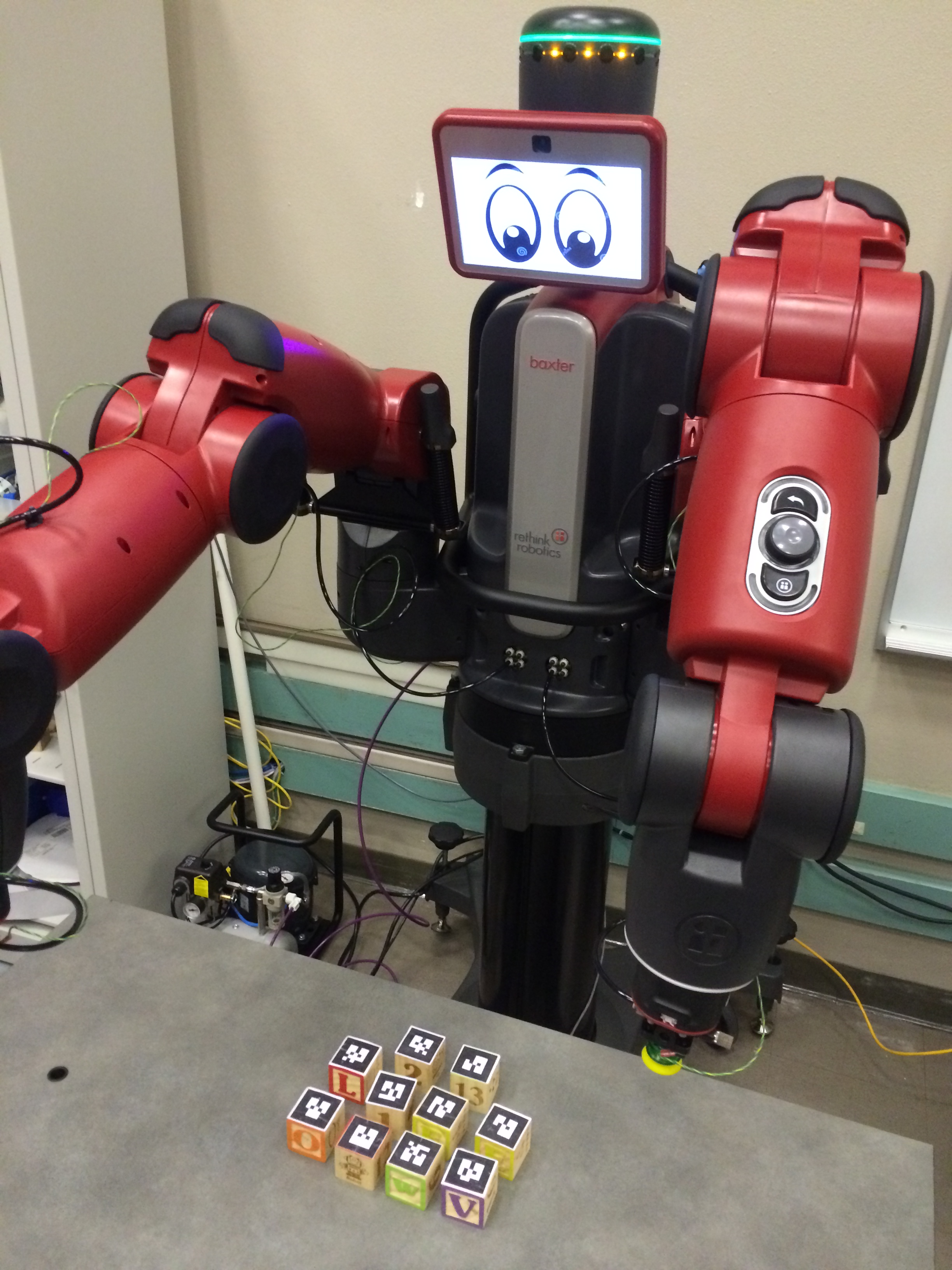

To complete our project, we used the standard Baxter robotic platform with the suction actuator attachment. We manipulated wooden toy blocks that were 1.5” cubes. We attached an AR Tag to one face of each block so that Baxter could uniquely identify it.

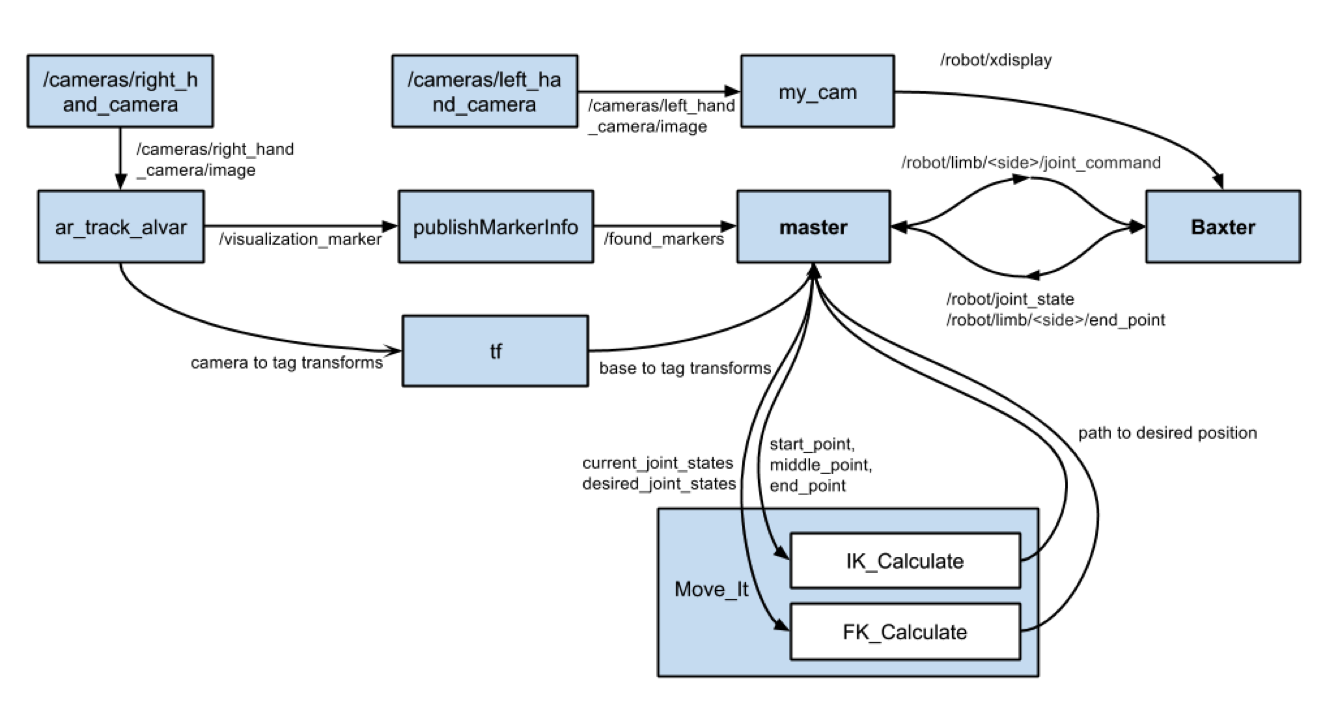

The ROS framework is the software glue that ties all of the hardware together.

1. Initialize AR Tag tracking and the MoveIt server, and enable the robot and camera.

2. Move Baxter's right arm over the toy blocks to localize them in the base frame.

3. The publisherMarkerInfo node publishes an array of all identified markers to the found_markers topic.

4. The master node decides which AR Tag to retrieve and computes the transform between the base frame and that AR Tag's frame.

5. MoveIt uses the translational component of the transform as a destination waypoint.

6. The master node also appends a series of intermediate waypoints to anchor MoveIt's trajectory, so that the arm moves away from the toy block along the z-direction and approaches the stack of toy blocks along the z-direction.

7. Baxter's right arm moves over the toy blocks again to get updated locations for the remaining blocks.

8. Steps 4 to 7 are repeated until all blocks have been retrieved and stacked.
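Computing the transform between the base frame and the AR Tag frame, and keeping its translational component as the MoveIt waypoint, amounts to composing two rigid-body transforms. The first is the pose of the hand camera in the base frame, and the second is the pose of the tag in the camera frame. In the running system, ROS's tf library presumably supplies the composed transform, for example through `tf2_ros`'s `Buffer.lookup_transform`. The pure-Python sketch below only illustrates the math. It assumes rotations about z only, to stay short, and the numbers in the example are made up.

```python
import math


def make_transform(yaw, translation):
    """Build a 4x4 homogeneous transform: rotation about z by `yaw` (radians)
    plus a translation (x, y, z) in meters. Simplified: real poses have full 3D rotation."""
    c, s = math.cos(yaw), math.sin(yaw)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def compose(a, b):
    """Matrix product a @ b of two 4x4 transforms (chains frame a -> b -> point)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation_of(t):
    """Translational component, i.e. the waypoint position handed to the planner."""
    return (t[0][3], t[1][3], t[2][3])


if __name__ == "__main__":
    # Hypothetical camera pose: 0.7 m ahead of the base, rotated 90 degrees about z.
    T_base_cam = make_transform(math.pi / 2, (0.7, -0.3, 0.1))
    # Hypothetical tag detection: 0.05 m along the camera x-axis, 0.3 m below it.
    T_cam_tag = make_transform(0.0, (0.05, 0.0, -0.3))
    T_base_tag = compose(T_base_cam, T_cam_tag)
    print([round(v, 3) for v in translation_of(T_base_tag)])  # [0.7, -0.25, -0.2]
```

The order of composition matters: `compose(T_base_cam, T_cam_tag)` expresses the tag in the base frame, while the reverse order would not be meaningful here.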

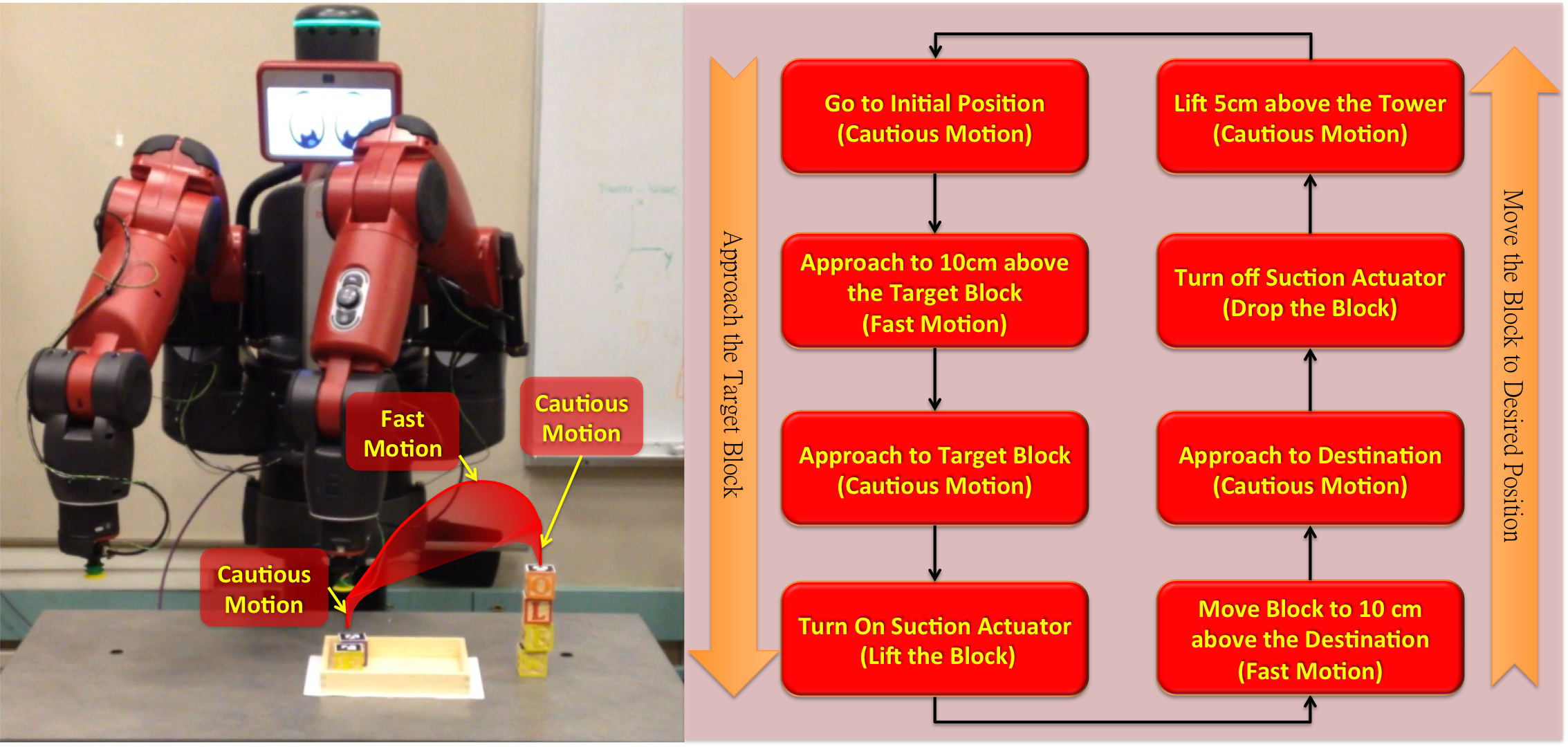

Baxter's path planning can be separated into the following steps:

1. Go to the initial position: To avoid collapsing the tower, we want Baxter to keep a safe distance from it so that its motion does not disturb the tower's balance. Therefore, Baxter moves to the initial position at low speed before grasping the next block.

2. Move to 10 cm above the target block: The target pose is obtained from the publisherMarkerInfo node, and MoveIt generates the path. To make Baxter's motion more efficient, we add via points to the path so the robot does not take detours. We also increase the robot's speed in this segment, since there is no risk of hitting any objects.

3. Approach the target block: To make contact at the center of the target, Baxter approaches the block cautiously.

4. Turn on the suction actuator: Baxter turns on the vacuum gripper to attach the block to its end effector.

5. Move the block to 10 cm above the destination: The destination is computed from the position of the last block placed. As in Step 2, we generate via points and use fast motion to approach the destination.

6. Approach the destination: To eliminate placement offset and minimize the risk of collapse, Baxter approaches the destination at very low speed.

7. Turn off the suction actuator: Baxter turns off the vacuum gripper to release the block at the desired placement.

8. Lift 5 cm above the tower: Baxter moves away from the contact point on the tower and waits for the next iteration.
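The path-planning steps above can be written as a fixed sequence of Cartesian targets, each paired with a speed. The sketch below is a minimal illustration under stated assumptions. The 10 cm hover and 5 cm lift heights come from the steps above, and the 0.0381 m (1.5-inch) block size comes from the Implementation section. The table height, home pose, speed values, and all names in the code are hypothetical, and the via points MoveIt would use between targets are omitted. With `moveit_commander`, a speed value like this would typically be passed to `MoveGroupCommander.set_max_velocity_scaling_factor` before planning each segment.

```python
from typing import List, NamedTuple, Optional, Tuple

Point = Tuple[float, float, float]

BLOCK_SIZE = 0.0381  # 1.5-inch cube, in meters
HOVER = 0.10         # height above target/destination before the slow approach
LIFT = 0.05          # retreat height above the tower after release

# Hypothetical velocity scaling factors (fraction of maximum joint velocity).
SLOW, CAUTIOUS, FAST = 0.1, 0.2, 0.8


class Step(NamedTuple):
    name: str
    target: Optional[Point]  # end-effector position in the base frame; None for gripper steps
    speed: Optional[float]   # velocity scaling for the motion; None for gripper steps


def pick_and_place_plan(home: Point, block_top: Point,
                        tower_xy: Tuple[float, float], last_top_z: float) -> List[Step]:
    """Return the eight pick-and-place steps for one block.

    block_top:  position of the target block's top face (where its AR Tag sits).
    last_top_z: height of the top face of the last block placed
                (the table surface for the first block).
    """
    px, py, pz = block_top
    tx, ty = tower_xy
    # The suction cup holds the carried block by its top face, so when that block
    # rests on the tower the cup sits one block height above the current tower top.
    place_z = last_top_z + BLOCK_SIZE
    return [
        Step("go to initial position", home, SLOW),
        Step("move above target block", (px, py, pz + HOVER), FAST),
        Step("approach target block", (px, py, pz), CAUTIOUS),
        Step("suction on", None, None),
        Step("move above destination", (tx, ty, place_z + HOVER), FAST),
        Step("approach destination", (tx, ty, place_z), SLOW),
        Step("suction off", None, None),
        Step("lift above tower", (tx, ty, place_z + LIFT), SLOW),
    ]


if __name__ == "__main__":
    # Hypothetical positions in the base frame (meters).
    plan = pick_and_place_plan(home=(0.6, -0.6, 0.2),
                               block_top=(0.65, -0.35, -0.19),
                               tower_xy=(0.65, 0.1),
                               last_top_z=-0.228)
    for step in plan:
        print(step.name, step.target, step.speed)
```

After each placement, the caller would add `BLOCK_SIZE` to `last_top_z` so that the next plan targets the new top of the tower.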

Results

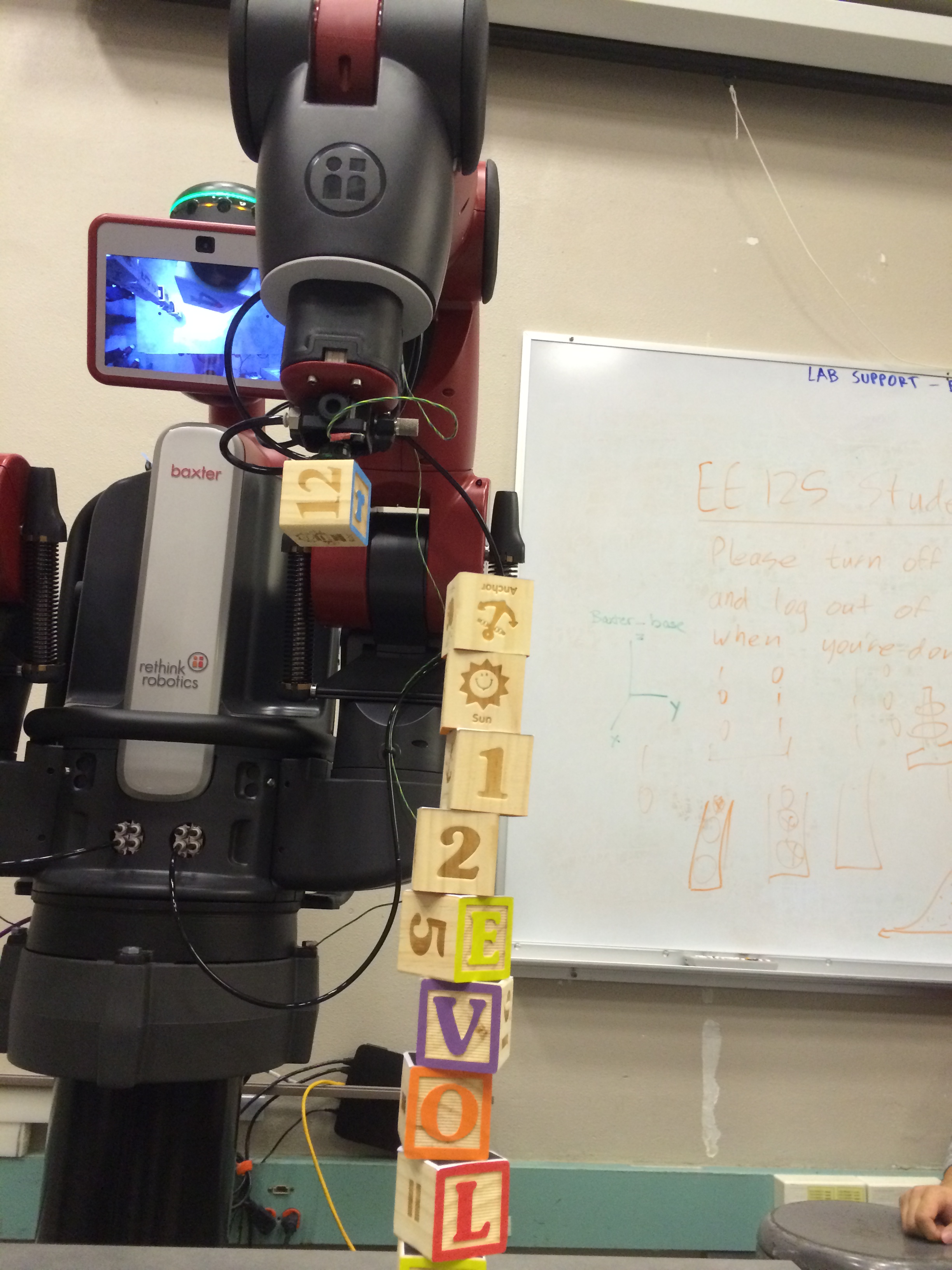

The video below summarizes our work. In short, we successfully:

- Implemented autonomous tracking and selection of toy blocks marked with AR Tags

- Implemented autonomous path planning between toy block retrieval and placement

- Demonstrated the ability to stack toy blocks vertically 10 times in succession

Conclusion

Compared to our original goals, we completed most of our objectives. The exception is building more complicated structures, such as a house. We attribute this to the intrinsic positional inaccuracy of Baxter's arm, which hinders its ability to place toy blocks in a structurally stable arrangement.

Moreover, Baxter's hand-mounted cameras exhibited a fish-eye effect, which led to errors in the perceived locations of the AR Tags. Proper calibration could easily have corrected this distortion, but Baxter does not readily support it.
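To see why an uncalibrated wide-angle lens produces larger errors away from the image center (the same effect that motivated the grasping region), consider the common polynomial radial distortion model. In this model, a normalized image point at radius r is scaled by 1 + k1·r² + k2·r⁴. It is a standard approximation of barrel distortion, not a model we fitted to Baxter's cameras. The coefficients below are made up for illustration. A real calibration, for example with OpenCV's `calibrateCamera`, would estimate them, and the inverse mapping would then remove the offset.

```python
def distort(x, y, k1, k2):
    """Apply polynomial radial distortion to a normalized image point (x, y)."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert the model by fixed-point iteration (adequate for mild distortion)."""
    x, y = xd, yd
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / factor, yd / factor
    return x, y


if __name__ == "__main__":
    k1, k2 = -0.25, 0.05  # hypothetical barrel-distortion coefficients
    for r in (0.0, 0.2, 0.4, 0.6):
        xd, _ = distort(r, 0.0, k1, k2)
        print(f"true radius {r:.1f} -> observed {xd:.4f}, error {abs(xd - r):.4f}")
```

With these coefficients, the error is zero at the center and grows rapidly with radius. This is consistent with tags near the image edge being mislocated, while tags near the center are usable without calibration.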

If we had additional time, we would invest in an external camera solution to expand the viewable workspace and improve sensing reliability through properly calibrated cameras. In addition, we calibrated our setup for a particular table height (i.e., the table in Cory 119) to keep the problem tractable. Future work should make the system robust to a variety of table heights.

Team

Hsien-Chung Lin

Hsien-Chung Lin is a Mechanical Engineering student from Taiwan. He began his life as an international student in August 2013 and is now doing research in robot control. He is a tall guy who loves playing basketball. He also likes to cook and hang out with friends.

Te Tang

Te Tang is a student in the Mechanical Engineering Department, majoring in controls. He is interested in everything that can move automatically. His small dream is to build a robot himself. His big dream is to start a company that designs, produces, and promotes robots, by the people, to the people, for the people.

Dennis Wai

Dennis Wai is a Mechanical Engineering student who is a little too interested in Electrical Engineering and Computer Science. He likes robots and can often be found volunteering with Pioneers in Engineering, a student group focused on delivering robotics experience to underserved Bay Area communities. For his other interests, you can check out his website.

Additional Materials

- Source Code on Github

- Toy blocks

- ROS